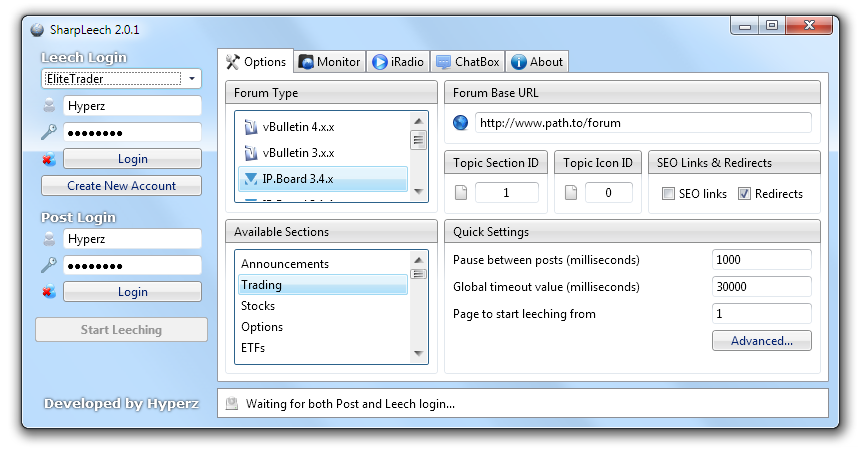

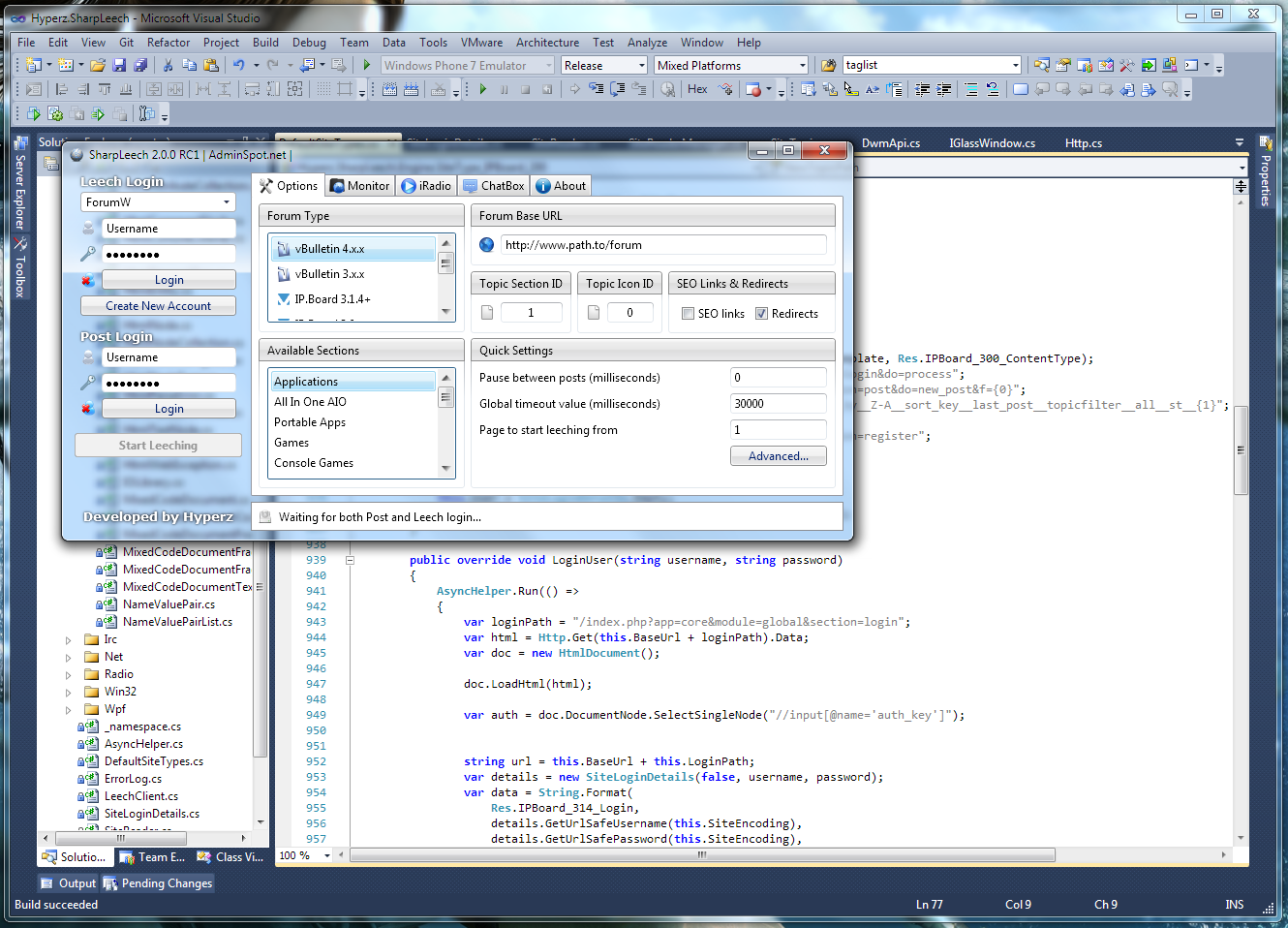

Was just passing by and saw deeTrix's ARTLeech topic, which reminded me that I still had an old project lying around collecting dust. Since I abandoned it ages ago and usually mess with C++/Qt these days, I figured I might as well release the source code so others can use it, benefit from it, and/or learn from it. So, enjoy.

Repo:

https://github.com/Hyperz/SharpLeech

Releases:

https://github.com/Hyperz/SharpLeech/releases

Notes:

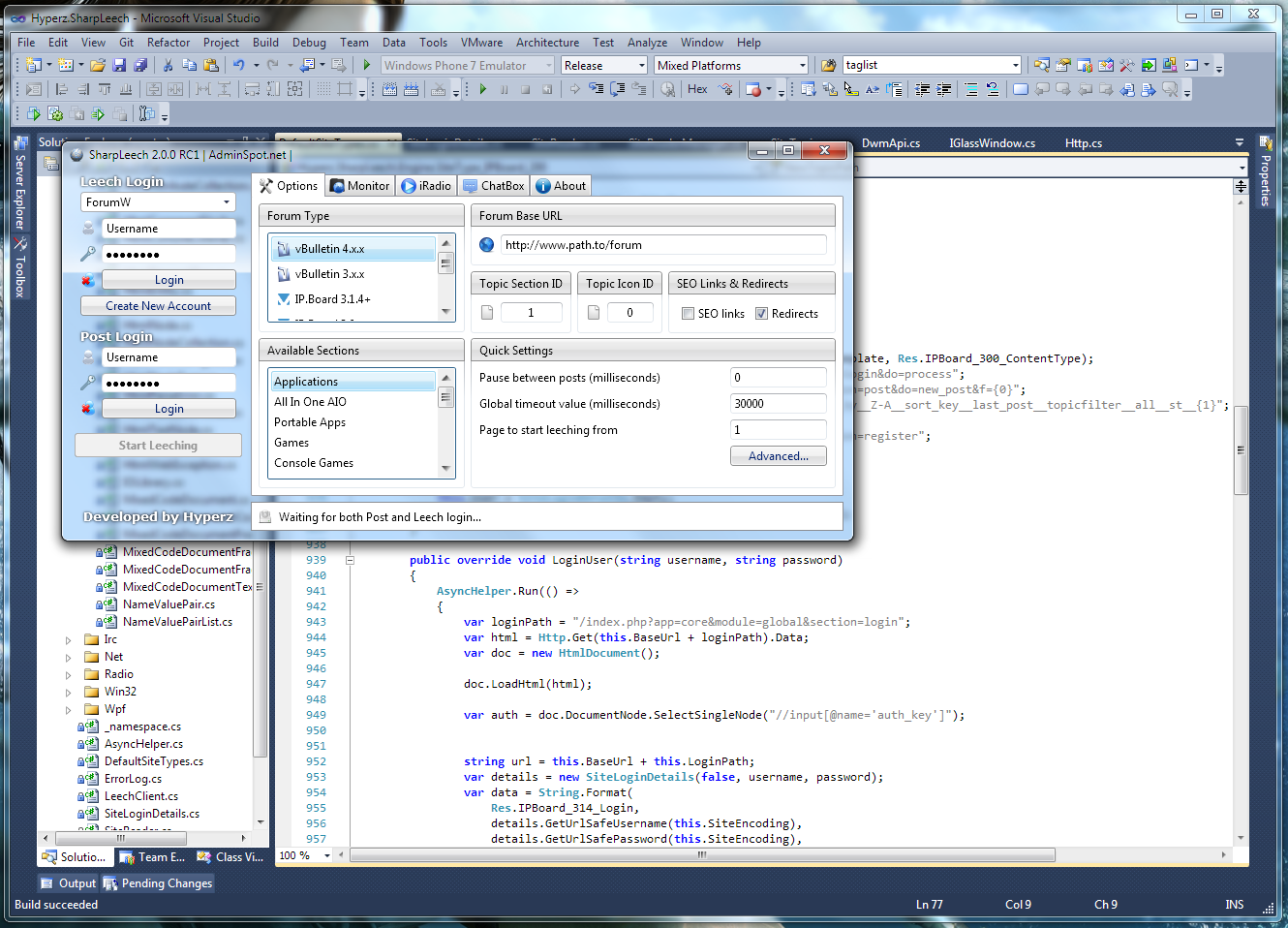

- The code is OLD and a lot of it wasn't written "by the book" so don't expect proper design patterns.

- Support for newer forum types can be added through DefaultSiteTypes.cs in the Engine project.

- Even though it's old, AFAIK it is still the fastest and most feature-rich forum leecher. All it really needs is support for newer forum software.

- Don't count on me for support, this project is abandoned.
